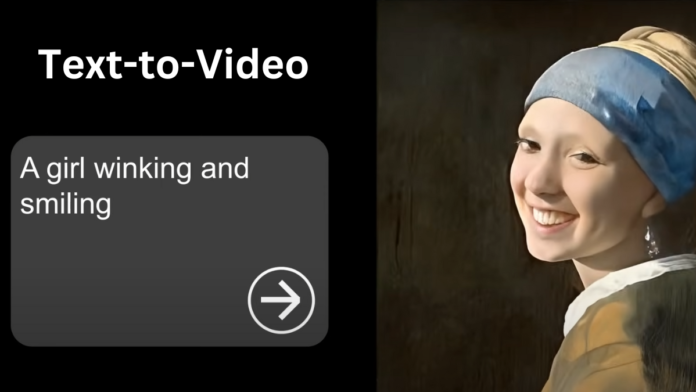

Lumiere is the latest text-to-video technology from Google Research, which describes it as “a text-to-video diffusion model designed for synthesizing videos that portray realistic, diverse, and coherent motion.”

Most text-to-video models now in use first create keyframes and then use other models to fill in the gaps between them, which often results in a lack of coherence. Lumiere circumvents this issue by “generating the full temporal duration of the video at once.”

The publication states, “We train our T2V model on a dataset containing 30 million videos along with their text captions,” which is disappointingly but understandably vague about the training data. Each video is 80 frames long at a frame rate of 16 fps, which works out to five seconds.
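As a quick check on those figures, reading them as 80 frames per clip played back at 16 fps, the clip length can be worked out as below. The function name is made up for this illustration.

```python
# Clip length implied by the figures quoted from the paper:
# 80 frames per clip, played back at 16 frames per second.
NUM_FRAMES = 80
FPS = 16


def clip_duration_seconds(num_frames: int, fps: int) -> float:
    """Return the playback duration of a clip in seconds."""
    return num_frames / fps


duration = clip_duration_seconds(NUM_FRAMES, FPS)
print(duration)  # 5.0
```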

Particularly striking are the instances of “stylized generation” that pair a text prompt with one style reference image.

By processing video at multiple space-time scales, using both spatial and temporal down- and up-sampling, Lumiere is able to directly produce a full-frame-rate, low-resolution video.

The Google Research project presents a novel space-time U-Net architecture that can produce an entire video’s temporal duration in a single model pass.

“We present Lumiere, a text-to-video diffusion model created to address a crucial issue in video synthesis: creating videos that depict realistic, varied, and coherent motion,” the Google research team stated in their article. “To this end, we introduce a Space-Time U-Net architecture that generates the entire temporal duration of the video at once, through a single pass in the model.”

Google Reveals Lumiere Text-to-Video

Lumiere takes a different approach from other video models, which synthesize distant keyframes and then apply temporal super-resolution to fill in the frames between them. Generating the whole clip at once makes global temporal consistency more achievable.
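A toy sketch can show why filling in frames between widely spaced keyframes tends to lose motion. It is not how Lumiere or any other video model works: each "frame" is reduced to a single number (an object's position), the keyframe spacing is an arbitrary assumption, and plain linear interpolation stands in for a learned temporal super-resolution model.

```python
import math

NUM_FRAMES = 80      # example clip length
KEYFRAME_STEP = 16   # hypothetical spacing between keyframes


def true_position(t: int) -> float:
    """Toy ground truth: an object oscillating with a period of 16 frames."""
    return math.sin(2 * math.pi * t / 16)


def interpolate_from_keyframes(keyframes: dict, num_frames: int) -> list:
    """Fill in every frame by linear interpolation between keyframes."""
    times = sorted(keyframes)
    frames = []
    for t in range(num_frames):
        # Nearest keyframe at or before t, and at or after t.
        left = max(k for k in times if k <= t)
        right = min((k for k in times if k >= t), default=left)
        if left == right:
            # On a keyframe, or past the last one: hold the keyframe value.
            frames.append(keyframes[left])
        else:
            w = (t - left) / (right - left)
            frames.append((1 - w) * keyframes[left] + w * keyframes[right])
    return frames


# Sample sparse keyframes from the true motion, then fill in the gaps.
keyframes = {t: true_position(t) for t in range(0, NUM_FRAMES, KEYFRAME_STEP)}
filled = interpolate_from_keyframes(keyframes, NUM_FRAMES)

# Largest per-frame gap between the true motion and the filled-in motion.
max_error = max(abs(true_position(t) - filled[t]) for t in range(NUM_FRAMES))
print(f"max error: {max_error:.3f}")
```

Because the keyframes here happen to land where the oscillation crosses zero, the filled-in clip is almost motionless even though the object really swings through its full range. A model that only sees the keyframes has no way to recover what happened between them.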

The architecture combines a pre-trained text-to-image diffusion model with spatial and temporal down- and up-sampling. This lets Lumiere process the video at multiple space-time scales and directly produce a full-frame-rate, low-resolution video.
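To make "spatial and temporal down- and up-sampling" concrete, here is a minimal sketch of what those operations do to the shape of a video. It is an illustration only: the article does not describe Lumiere's layers in detail, and a real network would use learned operations. Average pooling and nearest-neighbour repetition are stand-ins chosen for simplicity, and all function names are hypothetical.

```python
def make_video(t: int, h: int, w: int) -> list:
    """Build a toy video as nested lists indexed [frame][row][column]."""
    return [[[float(f * 100 + y * 10 + x) for x in range(w)]
             for y in range(h)]
            for f in range(t)]


def shape(video: list) -> tuple:
    """Return (frames, height, width) of a nested-list video."""
    return (len(video), len(video[0]), len(video[0][0]))


def downsample(video: list, factor: int = 2) -> list:
    """Average-pool by `factor` along time, height, and width.

    Assumes every dimension is divisible by `factor`.
    """
    t, h, w = shape(video)
    out = []
    for f in range(0, t, factor):
        frame = []
        for y in range(0, h, factor):
            row = []
            for x in range(0, w, factor):
                block = [video[f + df][y + dy][x + dx]
                         for df in range(factor)
                         for dy in range(factor)
                         for dx in range(factor)]
                row.append(sum(block) / len(block))
            frame.append(row)
        out.append(frame)
    return out


def upsample(video: list, factor: int = 2) -> list:
    """Nearest-neighbour upsampling along time, height, and width."""
    t, h, w = shape(video)
    return [[[video[f // factor][y // factor][x // factor]
              for x in range(w * factor)]
             for y in range(h * factor)]
            for f in range(t * factor)]


video = make_video(8, 4, 4)      # 8 frames of 4x4 pixels
coarse = downsample(video)       # coarser in both time and space
restored = upsample(coarse)      # back to the original shape

print(shape(video), shape(coarse), shape(restored))
```

The point of the sketch is that the coarse representation is smaller along the time axis as well as the spatial axes, so the middle of the network works on a compact summary of the whole clip rather than on individual frames.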

Google Research’s text-to-video generation framework is a major advancement in video synthesis. Lumiere achieves state-of-the-art text-to-video generation results by building on a pre-trained text-to-image diffusion model and addressing inherent limitations of prior techniques.

The novel space-time U-Net architecture makes it possible to produce full-frame-rate video clips and supports a variety of uses, such as image-to-video, video inpainting, and stylized generation.

Model T2I

The study notes its limits, stating that Lumiere is not intended to produce videos that consist of several shots or that involve transitions between scenes. The Google team states that this remains an open challenge for future research.

Furthermore, the model is based on a text-to-image (T2I) model that functions in pixel space, which means that producing high-resolution images requires a spatial super-resolution module.

Notwithstanding these drawbacks, Lumiere’s design ideas suggest new directions for text-to-video model development and have potential applications for latent video diffusion models.

Lumiere’s main goal is to enable inexperienced users to produce visual material in an inventive and adaptable manner. The possibility of abuse is acknowledged by the researchers, who stress the significance of creating instruments to identify biases and stop harmful use cases.

This emphasis on responsible use is in line with Google’s stated dedication to responsible AI development. In conclusion, Google’s Lumiere offers a fresh method for creating realistic and coherent motion in videos, marking a significant advancement in text-to-video AI production.

With an emphasis on usability and responsible implementation, the architectural and design ideas displayed in this project pave the way for further developments in video synthesis technology.

“The main objective of this effort is to empower inexperienced people to produce visual material in an inventive and adaptable manner. However, there is a chance that our technology could be abused to produce false or damaging information; thus, in order to guarantee safe and equitable use, we think it is essential to create and implement tools for identifying biases and malicious use cases,” the researchers wrote in their conclusion.

Final Thoughts on Lumiere Text-to-Video Generator

So that is Google Lumiere. I do know that in the last video Google put out, they embellished a little bit on what the model could actually do, but I believe that this could probably get to what they’re saying. I know Google is spending all of its time focused on AI, and I know that the CEO, the whole C-suite, and everybody over there have told the entire staff that their focus for 2024 is to make the best AI model out there. So I believe in where they’re going. The question is, when are these tools going to get into our hands so that we can play with them and see for ourselves? That’s the thing I’m most excited about.
